publications

2025

- Factual Knowledge Assessment of Language Models Using Distractors. Hichem Ammar Khodja, Abderrahmane Ait gueni ssaid, Frederic Bechet, Quentin Brabant, Alexis Nasr, and Gwénolé Lecorvé. In Proceedings of the 31st International Conference on Computational Linguistics, Jan 2025.

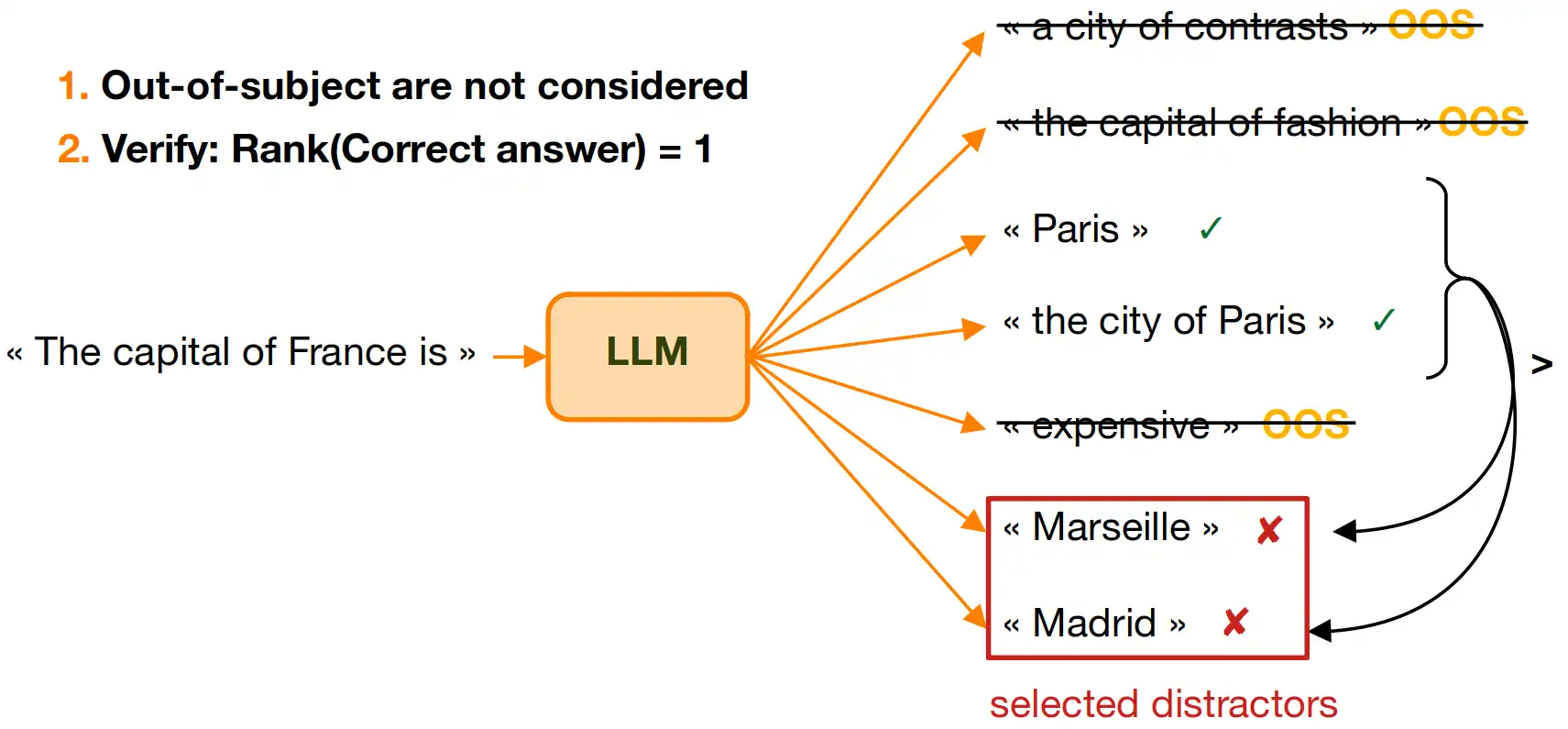

Language models encode extensive factual knowledge within their parameters. The accurate assessment of this knowledge is crucial for understanding and improving these models. In the literature, factual knowledge assessment often relies on cloze sentences, which can lead to erroneous conclusions due to the complexity of natural language (out-of-subject continuations, the existence of many correct answers and the several ways of expressing them). In this paper, we introduce a new interpretable knowledge assessment method that mitigates these issues by leveraging distractors—incorrect but plausible alternatives to the correct answer. We propose several strategies for retrieving distractors and determine the most effective one through experimentation. Our method is evaluated against existing approaches, demonstrating solid alignment with human judgment and stronger robustness to verbalization artifacts. The code and data to reproduce our experiments are available on GitHub.

@inproceedings{Factual_Knowledge_Assessment_of_LLMs_Using_Distractors,
  title = {Factual Knowledge Assessment of Language Models Using Distractors},
  author = {Ammar Khodja, Hichem and {Ait gueni ssaid}, Abderrahmane and Bechet, Frederic and Brabant, Quentin and Nasr, Alexis and Lecorv{\'e}, Gw{\'e}nol{\'e}},
  editor = {Rambow, Owen and Wanner, Leo and Apidianaki, Marianna and Al-Khalifa, Hend and Eugenio, Barbara Di and Schockaert, Steven},
  booktitle = {Proceedings of the 31st International Conference on Computational Linguistics},
  month = jan,
  year = {2025},
  address = {Abu Dhabi, UAE},
  publisher = {Association for Computational Linguistics},
  url = {https://aclanthology.org/2025.coling-main.537/},
  pages = {8043--8056},
}